Add Activepieces integration for workflow automation

- Add Activepieces fork with SmoothSchedule custom piece
- Create integrations app with Activepieces service layer
- Add embed token endpoint for iframe integration
- Create Automations page with embedded workflow builder
- Add sidebar visibility fix for embed mode
- Add list inactive customers endpoint to Public API
- Include SmoothSchedule triggers: event created/updated/cancelled
- Include SmoothSchedule actions: create/update/cancel events, list resources/services/customers

🤖 Generated with [Claude Code](https://claude.com/claude-code)

Co-Authored-By: Claude Opus 4.5 <noreply@anthropic.com>


18

activepieces-fork/docs/install/architecture/engine.mdx

Normal file


@@ -0,0 +1,18 @@

---
title: "Engine"
icon: "brain"
---

The Engine file contains the following types of operations:

- **Extract Piece Metadata**: Extracts metadata when installing new pieces.
- **Execute Step**: Executes a single test step.
- **Execute Flow**: Executes a flow.
- **Execute Property**: Executes dynamic dropdowns or dynamic properties.
- **Execute Trigger Hook**: Executes actions such as OnEnable, OnDisable, or extracting payloads.
- **Execute Auth Validation**: Validates the authentication of the connection.
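
As a rough illustration, the six operations can be modeled as a TypeScript discriminated union that the engine dispatches on. The `kind` values, payload fields, and `describeOperation` helper below are hypothetical names chosen for this sketch, not the engine's actual types.

```typescript
// Hypothetical operation shapes for illustration only; the real engine's
// types and field names are not documented on this page.
type EngineOperation =
  | { kind: "EXTRACT_PIECE_METADATA"; pieceName: string }
  | { kind: "EXECUTE_STEP"; stepName: string }
  | { kind: "EXECUTE_FLOW"; flowId: string }
  | { kind: "EXECUTE_PROPERTY"; propertyName: string }
  | { kind: "EXECUTE_TRIGGER_HOOK"; hook: "ON_ENABLE" | "ON_DISABLE" | "EXTRACT_PAYLOAD" }
  | { kind: "EXECUTE_AUTH_VALIDATION"; pieceName: string };

// Compile-time exhaustiveness check: adding a new kind without handling it
// below makes the `default` branch fail to type-check.
function assertNever(value: never): never {
  throw new Error(`Unhandled operation: ${JSON.stringify(value)}`);
}

// Routes each operation to a description of the work the engine performs.
function describeOperation(op: EngineOperation): string {
  switch (op.kind) {
    case "EXTRACT_PIECE_METADATA":
      return `extract metadata for piece ${op.pieceName}`;
    case "EXECUTE_STEP":
      return `run test step ${op.stepName}`;
    case "EXECUTE_FLOW":
      return `execute flow ${op.flowId}`;
    case "EXECUTE_PROPERTY":
      return `resolve dynamic property ${op.propertyName}`;
    case "EXECUTE_TRIGGER_HOOK":
      return `run trigger hook ${op.hook}`;
    case "EXECUTE_AUTH_VALIDATION":
      return `validate connection auth for piece ${op.pieceName}`;
    default:
      return assertNever(op);
  }
}
```

For example, `describeOperation({ kind: "EXECUTE_FLOW", flowId: "flow-1" })` returns `"execute flow flow-1"`. A single entry point that switches on the operation type keeps the compiled engine file usable for every kind of request.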


The engine takes the flow JSON, together with an engine token scoped to the project, and implements the APIs provided to the piece framework, such as:

- **Storage Service**: A simple key/value persistent store for the piece framework.
- **File Service**: A helper to store files either locally or in a database, such as for testing steps.
- **Fetch Metadata**: Retrieves metadata of the currently running project.
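
Here is a minimal sketch of what "scoped to the project" can mean for the Storage Service: every key is namespaced by the project, so one project's flows cannot read or overwrite another project's entries. The `KeyValueStore` interface and `ProjectScopedStore` class are hypothetical. The real service is persistent, so an in-memory `Map` stands in for it here.

```typescript
// Hypothetical key/value interface; not the piece framework's real API.
interface KeyValueStore {
  put(key: string, value: unknown): void;
  get(key: string): unknown;
  delete(key: string): void;
}

// Namespaces every key with the project ID. JSON-encoding the pair avoids
// collisions such as ("a", "b:c") vs ("a:b", "c") that a plain "a:b:c"
// prefix would allow.
class ProjectScopedStore implements KeyValueStore {
  private readonly projectId: string;
  private readonly backing: Map<string, unknown>;

  constructor(projectId: string, backing: Map<string, unknown>) {
    this.projectId = projectId;
    this.backing = backing;
  }

  private scoped(key: string): string {
    return JSON.stringify([this.projectId, key]);
  }

  put(key: string, value: unknown): void {
    this.backing.set(this.scoped(key), value);
  }

  get(key: string): unknown {
    return this.backing.get(this.scoped(key));
  }

  delete(key: string): void {
    this.backing.delete(this.scoped(key));
  }
}
```

Two stores created for different projects can share one backing map and still stay isolated. This mirrors how a project-scoped token limits what a running flow can reach.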

67

activepieces-fork/docs/install/architecture/overview.mdx

Normal file


@@ -0,0 +1,67 @@

|

||||

---

|

||||

title: "Overview"

|

||||

description: ""

|

||||

icon: "cube"

|

||||

---

|

||||

|

||||

This page focuses on describing the main components of Activepieces and focus mainly on workflow executions.

|

||||

|

||||

## Components

|

||||

|

||||

|

||||

|

||||

**Activepieces:**

|

||||

|

||||

- **App**: The main application that organizes everything from APIs to scheduled jobs.

|

||||

- **Worker**: Polls for new jobs and executes the flows with the engine, ensuring proper sandboxing, and sends results back to the app through the API.

|

||||

- **Engine**: TypeScript code that parses flow JSON and executes it. It is compiled into a single JS file.

|

||||

- **UI**: Frontend written in React.

|

||||

|

||||

**Third Party**:

|

||||

- **Postgres**: The main database for Activepieces.

|

||||

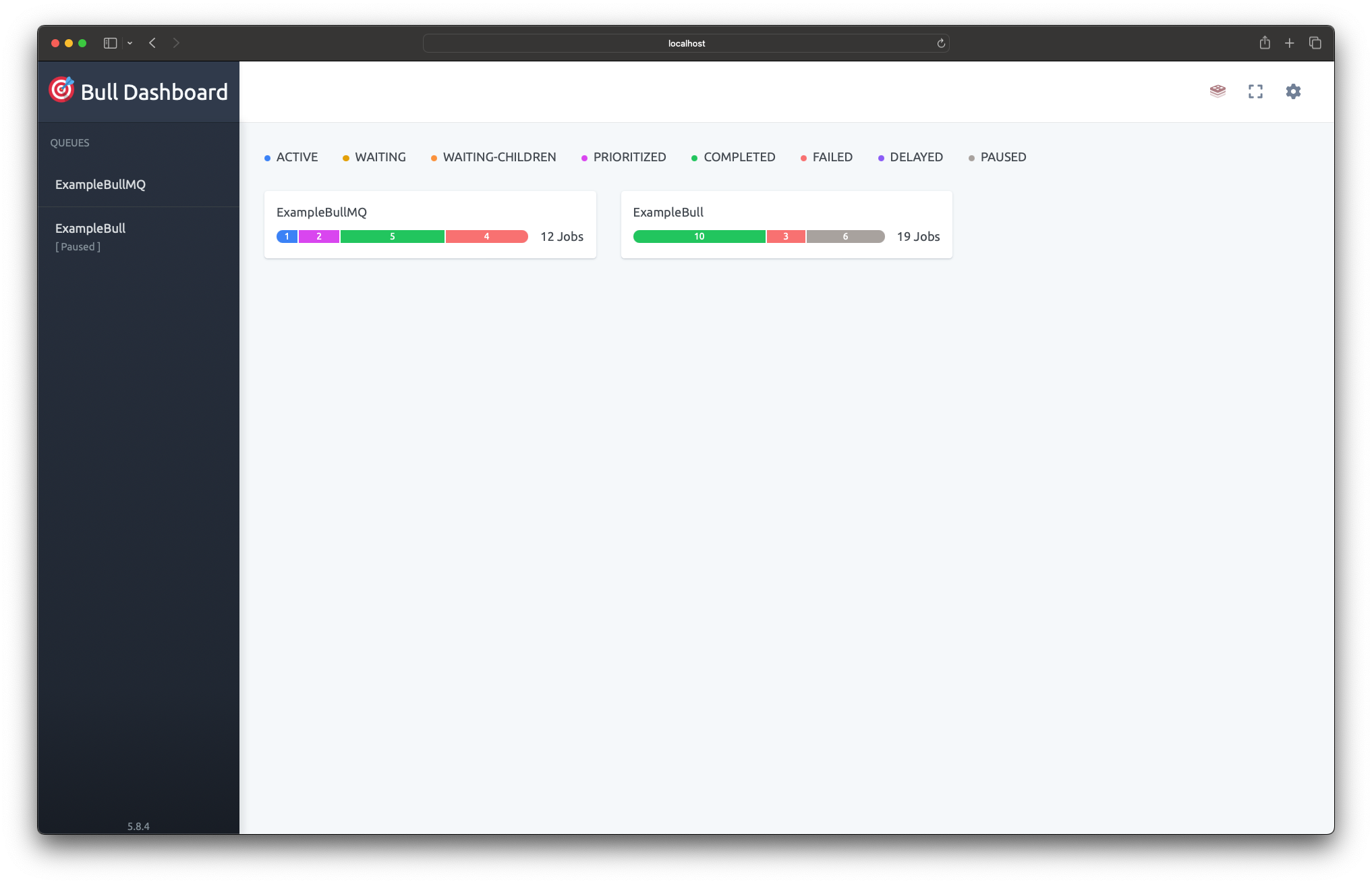

- **Redis**: This is used to power the queue using [BullMQ](https://docs.bullmq.io/).

|

||||

|

||||


## Reliability & Scalability

<Tip>
Postgres and Redis availability is outside the scope of this documentation, as many cloud providers already implement best practices to ensure their availability.
</Tip>

- **Webhooks**: All webhooks are sent to the Activepieces app, which performs basic validation and adds them to the queue. During a spike, incoming webhooks accumulate in the queue until workers process them.
- **Polling Trigger**: All recurring jobs are added to Redis. In case of a failure, the missed jobs are executed again.
- **Flow Execution**: Workers poll jobs from the queue. During a spike, flow execution still works but may be delayed, depending on the size of the spike.

To scale Activepieces, you typically need to increase the replicas of the workers, the app, or the Postgres database. A small Redis instance is sufficient, as it can handle thousands of jobs per second and rarely becomes a bottleneck.
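
The buffering behavior described above can be illustrated with a toy, synchronous model. A spike of webhooks lands in a queue, and each worker slot (replicas times per-worker concurrency) completes one job per tick. `InMemoryQueue`, `ticksToDrain`, `spike`, and the tick abstraction are assumptions made for this sketch. In Activepieces the queue is BullMQ on Redis, and real jobs take variable time.

```typescript
// Toy model only; Activepieces uses BullMQ on Redis, not this class.
type JobKind = "WEBHOOK" | "POLLING" | "FLOW";

interface Job {
  id: number;
  kind: JobKind;
}

class InMemoryQueue {
  private readonly jobs: Job[] = [];

  enqueue(job: Job): void {
    this.jobs.push(job);
  }

  // Workers poll: take the oldest job, or undefined if the queue is empty.
  poll(): Job | undefined {
    return this.jobs.shift();
  }

  get size(): number {
    return this.jobs.length;
  }
}

// Each tick, every worker slot polls and completes at most one job.
// Returns how many ticks it takes to empty the queue.
function ticksToDrain(queue: InMemoryQueue, workerSlots: number): number {
  if (workerSlots < 1) throw new Error("need at least one worker slot");
  let ticks = 0;
  while (queue.size > 0) {
    for (let slot = 0; slot < workerSlots && queue.size > 0; slot++) {
      queue.poll();
    }
    ticks++;
  }
  return ticks;
}

// Simulates `count` webhooks arriving at once.
function spike(count: number): InMemoryQueue {
  const queue = new InMemoryQueue();
  for (let id = 0; id < count; id++) queue.enqueue({ id, kind: "WEBHOOK" });
  return queue;
}
```

With 100 queued webhooks, 5 worker slots need 20 ticks, while 25 slots need 4. A spike delays execution rather than failing it, and adding worker replicas shortens the delay.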


## Repository Structure

The repository is structured as a monorepo using the NX build system, with TypeScript as the primary language. It is divided into several packages:

```
.
└── packages
    ├── react-ui
    ├── server
    │   ├── api
    │   ├── worker
    │   ├── shared
    │   └── ee
    ├── engine
    ├── pieces
    └── shared
```

- `react-ui`: This package contains the user interface, implemented using the React framework.
- `server-api`: This package contains the main application, written in TypeScript with the Fastify framework.
- `server-worker`: This package contains the logic for accepting flow jobs and executing them using the engine.
- `server-shared`: This package contains the logic shared between the worker and the app.
- `engine`: This package contains the logic for flow execution within the sandbox.
- `pieces`: This package contains the implementation of triggers and actions for third-party apps.
- `shared`: This package contains shared data models and helper functions used by the other packages.
- `ee`: This package contains features that are only available in the paid edition.

107

activepieces-fork/docs/install/architecture/performance.mdx

Normal file


@@ -0,0 +1,107 @@

---
title: "Benchmarking"
icon: "chart-line"
---

## Performance

On average, Activepieces (self-hosted) can handle 95 flow executions per second on a single instance (including PostgreSQL and Redis), with a mean latency under 300 ms (about 260 ms in the run below).

It can scale much further by increasing instance resources and/or adding more instances.

The result of **5000** requests with a concurrency of **25**:


```
This is ApacheBench, Version 2.3 <$Revision: 1913912 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking localhost (be patient)
Completed 500 requests
Completed 1000 requests
Completed 1500 requests
Completed 2000 requests
Completed 2500 requests
Completed 3000 requests
Completed 3500 requests
Completed 4000 requests
Completed 4500 requests
Completed 5000 requests
Finished 5000 requests


Server Software:
Server Hostname:        localhost
Server Port:            4200

Document Path:          /api/v1/webhooks/GMtpNwDsy4mbJe3369yzy/sync
Document Length:        16 bytes

Concurrency Level:      25
Time taken for tests:   52.087 seconds
Complete requests:      5000
Failed requests:        0
Total transferred:      1375000 bytes
HTML transferred:       80000 bytes
Requests per second:    95.99 [#/sec] (mean)
Time per request:       260.436 [ms] (mean)
Time per request:       10.417 [ms] (mean, across all concurrent requests)
Transfer rate:          25.78 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.0      0       1
Processing:    32  260  23.8    254     756
Waiting:       31  260  23.8    254     756
Total:         32  260  23.8    254     756

Percentage of the requests served within a certain time (ms)
  50%    254
  66%    261
  75%    267
  80%    272
  90%    289
  95%    306
  98%    327
  99%    337
 100%    756 (longest request)
```
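
The summary lines in this output follow directly from the raw totals. The sketch below recomputes them using what we understand to be ab's formulas, which can also serve as a sanity check when you run your own benchmark. `AbRun` and `summarize` are names invented for this example. Small differences in the last digit (260.435 vs. 260.436 ms) come from ab using the unrounded elapsed time.

```typescript
// Raw totals reported by ab for the run above.
interface AbRun {
  completeRequests: number;
  concurrency: number;
  timeTakenSeconds: number;
  totalTransferredBytes: number;
}

// Recomputes ab's summary lines from the raw totals.
function summarize(run: AbRun) {
  const ms = run.timeTakenSeconds * 1000;
  return {
    // Throughput: completed requests divided by wall-clock time.
    requestsPerSecond: run.completeRequests / run.timeTakenSeconds,
    // Mean latency seen by each of the `concurrency` clients.
    meanMsPerRequest: (run.concurrency * ms) / run.completeRequests,
    // Wall-clock time per request across all concurrent clients.
    msPerRequestAcrossAll: ms / run.completeRequests,
    // ab reports kilobytes as 1024-byte units.
    transferKbytesPerSecond: run.totalTransferredBytes / 1024 / run.timeTakenSeconds,
    // Response headers plus the 16-byte body of each response.
    bytesPerResponse: run.totalTransferredBytes / run.completeRequests,
  };
}

const result = summarize({
  completeRequests: 5000,
  concurrency: 25,
  timeTakenSeconds: 52.087,
  totalTransferredBytes: 1375000,
});
console.log(result);
```

Because the mean per-request time is roughly the concurrency divided by the throughput (25 / 95.99 s is about 260 ms), raising concurrency without adding capacity increases latency rather than throughput.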


#### Benchmarking

Here is how to reproduce the benchmark:

1. Run Activepieces with PostgreSQL and Redis, using the following environment variables:

```env
AP_EXECUTION_MODE=SANDBOX_CODE_ONLY
# AP_FLOW_WORKER_CONCURRENCY is deprecated since 0.69.0; use AP_WORKER_CONCURRENCY instead.
AP_WORKER_CONCURRENCY=25
```

2. Create a flow with a Catch Webhook trigger and a webhook Return Response action.
3. Get the webhook URL from the webhook trigger and append `/sync` to it.
4. Install a benchmark tool such as [ab](https://httpd.apache.org/docs/2.4/programs/ab.html):

```bash
sudo apt-get install apache2-utils
```

5. Run the benchmark, replacing the URL with your own `/sync` webhook URL from step 3:

```bash
ab -c 25 -n 5000 http://localhost:4200/api/v1/webhooks/GMtpNwDsy4mbJe3369yzy/sync
```

6. Check the results and compare them with the output above.

Instance specs used to get the above results:

- 16 GB RAM
- AMD Ryzen 7 8845HS (8 cores, 16 threads)
- Ubuntu 24.04 LTS

<Tip>
These benchmarks are based on running Activepieces in `SANDBOX_CODE_ONLY` mode. This does **not** represent the performance of Activepieces Cloud, which uses a different sandboxing mechanism to support multi-tenancy. For more information, see [Sandboxing](/install/architecture/workers#sandboxing).
</Tip>

98

activepieces-fork/docs/install/architecture/workers.mdx

Normal file


@@ -0,0 +1,98 @@

---
title: "Workers & Sandboxing"
icon: "gears"
---

This component is responsible for polling jobs from the app, preparing the sandbox, and executing the jobs with the engine.

## Jobs

There are three types of jobs:

- **Recurring Jobs**: Polling and schedule trigger jobs for active flows.
- **Flow Jobs**: Flows that are currently being executed.
- **Webhook Jobs**: Webhooks that still need to be ingested, since a third-party webhook can map to multiple flows or may need mapping first.

<Tip>
This documentation does not discuss how the engine works beyond stating that it takes the jobs and produces the output. Please refer to [engine](./engine) for more information.
</Tip>
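
To make the webhook case concrete, here is a hypothetical sketch of how one ingested webhook job could fan out into flow jobs, one per subscribed flow. The `Job` shapes, the `source` field, and the subscription map are assumptions made for illustration. They are not the worker's real data model.

```typescript
// Hypothetical job shapes mirroring the three job types described above.
type Job =
  | { type: "RECURRING"; flowId: string }
  | { type: "FLOW"; flowId: string; payload: unknown }
  | { type: "WEBHOOK"; source: string; payload: unknown };

type FlowJob = Extract<Job, { type: "FLOW" }>;
type WebhookJob = Extract<Job, { type: "WEBHOOK" }>;

// Hypothetical subscription table: one third-party webhook source can be
// listened to by several flows.
type Subscriptions = Map<string, string[]>;

// Ingesting a webhook job produces one flow job per subscribed flow;
// a webhook nobody subscribes to produces none.
function ingestWebhook(job: WebhookJob, subscriptions: Subscriptions): FlowJob[] {
  const flowIds = subscriptions.get(job.source) ?? [];
  return flowIds.map((flowId): FlowJob => ({ type: "FLOW", flowId, payload: job.payload }));
}
```

Keeping ingestion as its own job type means the app can accept a webhook quickly and leave the potentially expensive mapping step to the workers.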


## Sandboxing

In Activepieces, the sandbox is the environment in which the engine executes a flow. There are four types of sandboxes, each with different trade-offs:

<Snippet file="execution-mode.mdx" />
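
The following sketch parses `AP_EXECUTION_MODE` into one of the four modes and records which isolation each one provides. The mode names, the `UNSANDBOXED` default, and the `SANDBOXED` to `SANDBOX_PROCESS` rename come from the environment variable and breaking-change pages. The isolation flags are our interpretation: V8 isolation for `SANDBOX_CODE_ONLY`, kernel namespaces for `SANDBOX_PROCESS`, and both for `SANDBOX_CODE_AND_PROCESS` (an assumption based on its name). `parseExecutionMode` and `ISOLATION` are hypothetical.

```typescript
// Mode names as listed under AP_EXECUTION_MODE.
const EXECUTION_MODES = [
  "UNSANDBOXED",
  "SANDBOX_CODE_ONLY",
  "SANDBOX_PROCESS",
  "SANDBOX_CODE_AND_PROCESS",
] as const;
type ExecutionMode = (typeof EXECUTION_MODES)[number];

interface Isolation {
  v8CodeIsolation: boolean;    // end-user code pieces run in a V8 isolate
  namespaceIsolation: boolean; // the flow runs in its own kernel namespaces
}

// Our reading of each mode; SANDBOX_CODE_AND_PROCESS is assumed to combine both.
const ISOLATION: Record<ExecutionMode, Isolation> = {
  UNSANDBOXED: { v8CodeIsolation: false, namespaceIsolation: false },
  SANDBOX_CODE_ONLY: { v8CodeIsolation: true, namespaceIsolation: false },
  SANDBOX_PROCESS: { v8CodeIsolation: false, namespaceIsolation: true },
  SANDBOX_CODE_AND_PROCESS: { v8CodeIsolation: true, namespaceIsolation: true },
};

// Parses the raw variable: unset falls back to the documented default, the
// deprecated SANDBOXED value maps to SANDBOX_PROCESS, and anything else
// unknown is rejected.
function parseExecutionMode(raw: string | undefined): ExecutionMode {
  if (raw === undefined || raw === "") return "UNSANDBOXED";
  if (raw === "SANDBOXED") return "SANDBOX_PROCESS";
  if ((EXECUTION_MODES as readonly string[]).includes(raw)) return raw as ExecutionMode;
  throw new Error(`Unknown AP_EXECUTION_MODE: ${raw}`);
}
```

Failing fast on an unknown value is deliberate: a typo in the mode should not silently fall back to a less isolated sandbox.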


### No Sandboxing & V8 Sandboxing

The difference between the two modes lies in how code pieces are executed. For V8 Sandboxing, we use [isolated-vm](https://www.npmjs.com/package/isolated-vm), which relies on V8 isolation to isolate code pieces.

These are the steps used to execute the flow:

<Steps>
<Step title="Prepare Code Pieces">
If the code has not been built yet, it is built with `bun`, and the necessary npm packages are prepared, if possible.
</Step>
<Step title="Install Pieces">
Pieces are npm packages; we use `bun` to install them.
</Step>
<Step title="Execution">
A pool of worker threads is kept warm, with the engine running and listening. Each thread executes one engine operation and sends back the result upon completion.
</Step>
</Steps>

#### Security

In a self-hosted environment, all piece installations are done by the **platform admin**. Pieces are assumed to be secure, as they have full access to the machine.

With V8 sandboxing, code pieces provided by the end user are isolated using V8, which restricts the user to browser JavaScript instead of Node.js with npm.

#### Performance

Flow execution is as fast as JavaScript allows, although there is overhead from polling the queue and from preparing the files the first time a flow is executed.

#### Benchmark

TBD


### Kernel Namespaces Sandboxing

This mode consists of two steps: preparing the sandbox and executing the flow.

#### Prepare the folder

Each flow has a folder containing everything required to execute it: the **engine**, the **code pieces**, and the **npm packages**.

<Steps>
<Step title="Prepare Code Pieces">
If the code has not been compiled yet, it is compiled using the TypeScript Compiler (tsc), and the necessary npm packages are prepared, if possible.
</Step>
<Step title="Install Pieces">
Pieces are npm packages. We perform a simple check, and if they don't exist, we use `pnpm` to install them.
</Step>
</Steps>

#### Execute Flow using Sandbox

In this mode, we use kernel namespaces to isolate everything (file system, memory, CPU). The folder prepared earlier is bound as a **read-only** directory.

We then use the command line to spin up the isolated environment with a new Node.js process, roughly as follows:

```bash
# Illustrative only; not the exact command Activepieces runs.
./isolate node path/to/flow.js # ...followed by the rest of the arguments
```

#### Security

Each flow execution runs in its own namespaces, so pieces are isolated in separate processes and namespaces. As a result, the user can safely run bash scripts and use the file system, since access is limited and everything is removed after the execution. In this mode, the user can use any **NPM package** in their code piece.

#### Performance

This mode is **slow** and **CPU intensive**. The reason is the **cold boot** of Node.js: each flow execution requires a new **Node.js** process, which consumes a lot of resources and takes time to compile the code and start executing.

#### Benchmark

TBD

@@ -0,0 +1,168 @@

---
title: "Breaking Changes"
description: "This list shows all versions that include breaking changes and how to upgrade."
icon: "hammer"
---

## 0.74.0

### What has changed?

- The default embedded database for development and lightweight deployments has changed from **SQLite3** to [**PGLite**](https://pglite.dev/) (embedded PostgreSQL).
- The environment variable `AP_DB_TYPE=SQLITE3` is now deprecated and replaced with `AP_DB_TYPE=PGLITE`.
- Existing SQLite databases will be automatically migrated to PGLite on first startup.
- Templates are broken in this version. A migration issue changed template IDs, breaking API endpoints. This will be fixed in the next patch release.

### Do you need to take action?

- **If you are using `AP_DB_TYPE=SQLITE3`:** Update your configuration to use `AP_DB_TYPE=PGLITE` instead.
- **If you are using templates:** Wait for the next patch release to fix the template IDs.

## 0.73.0

### What has changed?

- Major change to MCP: [Read the announcement.](https://community.activepieces.com/t/mcp-update-easier-faster-and-more-secure/11177)
- SMTP configured in the platform admin is no longer supported; you need to use the `AP_SMTP_*` [environment variables](https://www.activepieces.com/docs/install/configuration/environment-variables#environment-variables) instead.

### Do you need to take action?

- If you are currently using MCP, review the linked announcement for important migration details and upgrade guidance.
- If you configured SMTP in the platform admin, move those settings to the `AP_SMTP_*` environment variables.


## 0.71.0

### What has changed?

- In a separate workers setup, workers now need access to Redis.
- The `AP_EXECUTION_MODE` value `SANDBOXED` is now deprecated and replaced with `SANDBOX_PROCESS`.
- Code Copilot has been deprecated. It will be reintroduced in a different, more powerful form in the future.

### When is action necessary?

- If you have a separate workers setup, make sure that the workers have access to Redis.
- If you are using the `AP_EXECUTION_MODE` value `SANDBOXED`, replace it with `SANDBOX_PROCESS`.

## 0.70.0

### What has changed?

- `AP_QUEUE_MODE` is now deprecated and replaced with `AP_REDIS_TYPE`.
- If you are using Sentinel Redis, you should set `AP_REDIS_TYPE` to `SENTINEL`.

### When is action necessary?

- If you are using `AP_QUEUE_MODE`, replace it with `AP_REDIS_TYPE`.
- If you are using Sentinel Redis, set `AP_REDIS_TYPE` to `SENTINEL`.

## 0.69.0

### What has changed?

- `AP_FLOW_WORKER_CONCURRENCY` and `AP_SCHEDULED_WORKER_CONCURRENCY` are now deprecated and replaced with `AP_WORKER_CONCURRENCY`, since all jobs now share a single queue.

### When is action necessary?

- If you are using `AP_FLOW_WORKER_CONCURRENCY` or `AP_SCHEDULED_WORKER_CONCURRENCY`, replace them with `AP_WORKER_CONCURRENCY`.

## 0.66.0

### What has changed?

- If you use the embedding SDK, please upgrade to version 0.6.0. `embedding.dashboard.hideSidebar` previously hid the navbar above the flows table in the dashboard; this is now controlled by `embedding.dashboard.hideFlowsPageNavbar`.

## 0.64.0

### What has changed?

- MCP management is removed from the embedding SDK.

## 0.63.0

### What has changed?

- Replicate provider's text models have been removed.

### When is action necessary?

- If you are using one of Replicate's text models, replace it with a model from another provider.


## 0.46.0

### What has changed?

- The UI for "Array of Properties" inputs in the pieces has been updated, particularly affecting the "Dynamic Value" toggle functionality.

### When is action necessary?

- No action is required for this change.
- Your published flows will continue to work without interruption.
- When editing existing flows that use the "Dynamic Value" toggle on "Array of Properties" inputs (such as the "files" parameter in the "Extract Structured Data" action of the "Utility AI" piece), the end user will need to remap the values.
- For details on the new UI implementation, refer to this [announcement](https://community.activepieces.com/t/inline-items/8964).

## 0.38.6

### What has changed?

- Workers no longer rely on the `AP_FLOW_WORKER_CONCURRENCY` and `AP_SCHEDULED_WORKER_CONCURRENCY` environment variables. These values are now retrieved from the app server.

### When is action necessary?

- If `AP_CONTAINER_TYPE` is set to `WORKER` on the worker machine, and `AP_SCHEDULED_WORKER_CONCURRENCY` or `AP_FLOW_WORKER_CONCURRENCY` is set to zero on the app server, workers will stop processing the queues. To fix this, check the [Separate Worker from App](https://www.activepieces.com/docs/install/configuration/separate-workers) documentation and set `AP_CONTAINER_TYPE` so that the workers fetch the necessary values from the app server. If no container type is set on the worker machine, this is not a breaking change.

## 0.35.1

### What has changed?

- The `name` attribute has been renamed to `externalId` in the `AppConnection` entity.
- The `displayName` attribute has been added to the `AppConnection` entity.

### When is action necessary?

- If you are using the connections API, rename the `name` attribute to `externalId` and add the `displayName` attribute.
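
For clients of the connections API, this change is a field rename plus one new field. Here is a minimal sketch, assuming a request body with hypothetical `pieceName` and `value` fields alongside `name`. Defaulting `displayName` to the old `name` is also an assumption, so choose whatever label suits your users.

```typescript
// Hypothetical request bodies: only the name -> externalId rename and the
// new displayName field come from the 0.35.1 notes.
interface LegacyConnectionBody {
  name: string;
  pieceName: string;
  value: Record<string, unknown>;
}

interface ConnectionBody {
  externalId: string;
  displayName: string;
  pieceName: string;
  value: Record<string, unknown>;
}

// Moves `name` into `externalId` and fills `displayName`, falling back to
// the old name when no explicit label is given.
function migrateConnectionBody(legacy: LegacyConnectionBody, displayName?: string): ConnectionBody {
  const { name, ...rest } = legacy;
  return { ...rest, externalId: name, displayName: displayName ?? name };
}
```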


## 0.35.0

### What has changed?

- All branches are now converted to routers, and downgrading is not supported.

## 0.33.0

### What has changed?

- Files from actions or triggers are now stored in the database / S3 to support retries from certain steps, and the size of files from actions is now subject to the `AP_MAX_FILE_SIZE_MB` limit.
- Files in triggers were previously passed as base64-encoded strings; now they are passed as file paths in the database / S3. Paused flows that have triggers from version 0.29.0 or earlier will no longer work.

### When is action necessary?

- If you are dealing with large files in actions, consider increasing `AP_MAX_FILE_SIZE_MB`, and make sure the storage system (database/S3) has enough capacity for the files.

## 0.30.0

### What has changed?

- `AP_SANDBOX_RUN_TIME_SECONDS` is now deprecated and replaced with `AP_FLOW_TIMEOUT_SECONDS`.
- `AP_CODE_SANDBOX_TYPE` is now deprecated and replaced with a new mode of `AP_EXECUTION_MODE`.

### When is action necessary?

- If you set `AP_CODE_SANDBOX_TYPE` to `V8_ISOLATE`, set `AP_EXECUTION_MODE` to `SANDBOX_CODE_ONLY` instead.
- If you use `AP_SANDBOX_RUN_TIME_SECONDS` to set the sandbox run time limit, switch to `AP_FLOW_TIMEOUT_SECONDS`.

## 0.28.0

### What has changed?

- **Project Members:**
  - The `EXTERNAL_CUSTOMER` role has been deprecated and replaced with the `OPERATOR` role. Please check the permissions page for more details.
  - All pending invitations will be removed.
  - The User Invitation entity has been introduced to send invitations. You can still use the Project Member API to add roles for a user, but it requires the user to exist. If you want to send an email, use the User Invitation; a project member record is created after the user accepts and registers an account.
- **Authentication:**
  - The `SIGN_UP_ENABLED` environment variable, which allowed multiple users to sign up for different platforms/projects, has been removed. It has been replaced with inviting users to the same platform/project. All old users should continue to work normally.

### When is action necessary?

- **Project Members:**

  If you use the embedding SDK or the create project member API with the `EXTERNAL_CUSTOMER` role, start using the `OPERATOR` role instead.

- **Authentication:**

  Multiple platforms/projects are no longer supported in the community edition. Technically, everything is still there, but you would have to work around it using the API, as the authentication system has changed. If you have already created the users/platforms, they should continue to work, and no action is required.
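
Several of the changes above rename or retire environment variables. A small pre-upgrade check can flag them. This sketch encodes only the renames listed on this page, and `findDeprecated` is a hypothetical helper that is not part of Activepieces.

```typescript
// Deprecated settings and their replacements, taken from the notes above.
interface Deprecation {
  variable: string;
  since: string;
  replacement: string;
  deprecatedValue?: string; // set when only this value is deprecated
}

const DEPRECATIONS: Deprecation[] = [
  { variable: "AP_DB_TYPE", deprecatedValue: "SQLITE3", since: "0.74.0", replacement: "AP_DB_TYPE=PGLITE" },
  { variable: "AP_EXECUTION_MODE", deprecatedValue: "SANDBOXED", since: "0.71.0", replacement: "AP_EXECUTION_MODE=SANDBOX_PROCESS" },
  { variable: "AP_QUEUE_MODE", since: "0.70.0", replacement: "AP_REDIS_TYPE" },
  { variable: "AP_FLOW_WORKER_CONCURRENCY", since: "0.69.0", replacement: "AP_WORKER_CONCURRENCY" },
  { variable: "AP_SCHEDULED_WORKER_CONCURRENCY", since: "0.69.0", replacement: "AP_WORKER_CONCURRENCY" },
  { variable: "AP_SANDBOX_RUN_TIME_SECONDS", since: "0.30.0", replacement: "AP_FLOW_TIMEOUT_SECONDS" },
  { variable: "AP_CODE_SANDBOX_TYPE", since: "0.30.0", replacement: "AP_EXECUTION_MODE (V8_ISOLATE becomes SANDBOX_CODE_ONLY)" },
  { variable: "SIGN_UP_ENABLED", since: "0.28.0", replacement: "user invitations" },
];

// Returns one warning per deprecated setting present in `env`, in the
// order the deprecations are listed.
function findDeprecated(env: Record<string, string | undefined>): string[] {
  const warnings: string[] = [];
  for (const d of DEPRECATIONS) {
    const value = env[d.variable];
    if (value === undefined) continue;
    if (d.deprecatedValue !== undefined && value !== d.deprecatedValue) continue;
    const setting = d.deprecatedValue !== undefined ? `${d.variable}=${value}` : d.variable;
    warnings.push(`${setting} is deprecated since ${d.since}; use ${d.replacement}`);
  }
  return warnings;
}
```

In Node.js you could pass `process.env` to `findDeprecated` before upgrading and print each warning.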

@@ -0,0 +1,136 @@

---
title: 'Environment Variables'
description: ''
icon: 'gear'
---

To configure Activepieces, you need to set some environment variables. There is a file called `.env` in the root directory of our main repository.

<Tip> When you execute the [tools/deploy.sh](https://github.com/activepieces/activepieces/blob/main/tools/deploy.sh) script in the Docker installation tutorial,
it will produce these values. </Tip>

## Environment Variables


| Variable | Description | Default Value | Example |
| --- | --- | --- | --- |
| `AP_CONFIG_PATH` | Optional path for storing the PGLite database and local settings. | `~/.activepieces` | |
| `AP_CLOUD_AUTH_ENABLED` | Turn off the use of Activepieces OAuth2 applications. | `false` | |
| `AP_DB_TYPE` | The type of database to use: `POSTGRES` for external PostgreSQL, `PGLITE` for the embedded database. **Note:** `SQLITE3` is deprecated and will be automatically migrated to `PGLITE`. | `POSTGRES` | |
| `AP_EXECUTION_MODE` | One of `SANDBOX_PROCESS`, `UNSANDBOXED`, `SANDBOX_CODE_ONLY`, or `SANDBOX_CODE_AND_PROCESS`. If you decide to change this, carefully read https://www.activepieces.com/docs/install/architecture/workers first. | `UNSANDBOXED` | |
| `AP_WORKER_CONCURRENCY` | The number of jobs that can be processed at the same time. | `10` | |
| `AP_AGENTS_WORKER_CONCURRENCY` | The number of agents that can be processed at the same time. | `10` | |
| `AP_ENCRYPTION_KEY` | ❗️ Encryption key used for connections: a 32-character (16-byte) hexadecimal key. You can generate one with `openssl rand -hex 16`. | None | |
| `AP_EXECUTION_DATA_RETENTION_DAYS` | The number of days to retain execution data, logs, and events. | `30` | |
| `AP_FRONTEND_URL` | ❗️ URL used to build redirect URLs and webhook URLs. | None | [https://demo.activepieces.com](https://demo.activepieces.com) |
| `AP_INTERNAL_URL` | (BETA) Used to specify the SSO authentication URL. | None | [https://demo.activepieces.com/api](https://demo.activepieces.com/api) |
| `AP_JWT_SECRET` | ❗️ Encryption key used for generating JWT tokens: a 64-character (32-byte) hexadecimal key. You can generate one with `openssl rand -hex 32`. | None | |
| `AP_QUEUE_UI_ENABLED` | Enable the queue UI (only works with Redis). | `true` | |
| `AP_QUEUE_UI_USERNAME` | The username for the queue UI. This is required if `AP_QUEUE_UI_ENABLED` is set to `true`. | None | |
| `AP_QUEUE_UI_PASSWORD` | The password for the queue UI. This is required if `AP_QUEUE_UI_ENABLED` is set to `true`. | None | |
| `AP_REDIS_FAILED_JOB_RETENTION_DAYS` | The number of days to retain failed jobs in Redis. | `30` | |
| `AP_REDIS_FAILED_JOB_RETENTION_MAX_COUNT` | The maximum number of failed jobs to retain in Redis. | `2000` | |
| `AP_TRIGGER_DEFAULT_POLL_INTERVAL` | How many minutes the system waits between checks for new data in pieces with scheduled triggers, such as new Google Contacts. | `5` | |
| `AP_PIECES_SOURCE` | `FILE` for local development, `DB` for database. See the [Setting Piece Source](#setting-piece-source) section for more information. | `CLOUD_AND_DB` | |
| `AP_PIECES_SYNC_MODE` | `NONE` for no metadata syncing, `OFFICIAL_AUTO` for automatic syncing of piece metadata from the cloud. | `OFFICIAL_AUTO` | |
| `AP_POSTGRES_DATABASE` | ❗️ The name of the PostgreSQL database | None | |
| `AP_POSTGRES_HOST` | ❗️ The hostname or IP address of the PostgreSQL server | None | |
| `AP_POSTGRES_PASSWORD` | ❗️ The password for PostgreSQL. You can generate a 64-character hexadecimal key with `openssl rand -hex 32`. | None | |
| `AP_POSTGRES_PORT` | ❗️ The port number for the PostgreSQL server | None | |
| `AP_POSTGRES_USERNAME` | ❗️ The username for the PostgreSQL user | None | |
| `AP_POSTGRES_USE_SSL` | Use SSL to connect to the PostgreSQL database | `false` | |
| `AP_POSTGRES_SSL_CA` | SSL certificate used to connect to the PostgreSQL database | | |
| `AP_POSTGRES_URL` | Alternatively, you can specify only the connection string (e.g. `postgres://user:password@host:5432/database`) instead of providing the database, host, port, username, and password. | None | |
| `AP_POSTGRES_POOL_SIZE` | Maximum number of clients the pool should contain for the PostgreSQL database | None | |
| `AP_POSTGRES_IDLE_TIMEOUT_MS` | Idle timeout, in milliseconds, for clients in the PostgreSQL pool | `30000` | |
| `AP_REDIS_TYPE` | Where Redis runs: in memory (`MEMORY`), in a dedicated instance (`STANDALONE`), or in a Sentinel setup (`SENTINEL`) | `STANDALONE` | |
| `AP_REDIS_URL` | If a Redis connection URL is specified, all other Redis properties are ignored. | None | |
| `AP_REDIS_USER` | ❗️ Username to use when connecting to Redis | None | |
| `AP_REDIS_PASSWORD` | ❗️ Password to use when connecting to Redis | None | |
| `AP_REDIS_HOST` | ❗️ The hostname or IP address of the Redis server | None | |
| `AP_REDIS_PORT` | ❗️ The port number for the Redis server | None | |
| `AP_REDIS_DB` | The Redis database index to use | `0` | |
| `AP_REDIS_USE_SSL` | Connect to Redis with SSL | `false` | |
| `AP_REDIS_SSL_CA_FILE` | The path to the CA file for the Redis server. | None | |
| `AP_REDIS_SENTINEL_HOSTS` | If specified, this should be a comma-separated list of `host:port` pairs for Redis Sentinels. Make sure to set `AP_REDIS_TYPE` to `SENTINEL`. | None | `sentinel-host-1:26379,sentinel-host-2:26379,sentinel-host-3:26379` |
| `AP_REDIS_SENTINEL_NAME` | The name of the master node monitored by the sentinels. | None | `sentinel-host-1` |
| `AP_REDIS_SENTINEL_ROLE` | The role to connect to, either `master` or `slave`. | None | `master` |
| `AP_TRIGGER_TIMEOUT_SECONDS` | Maximum allowed runtime, in seconds, for a trigger to perform polling | `60` | |
| `AP_FLOW_TIMEOUT_SECONDS` | Maximum allowed runtime, in seconds, for a flow | `600` | |
| `AP_AGENT_TIMEOUT_SECONDS` | Maximum allowed runtime, in seconds, for an agent | `600` | |
| `AP_SANDBOX_MEMORY_LIMIT` | The maximum amount of memory (in kilobytes) that a single sandboxed worker process can use. This helps prevent runaway memory usage in custom code or pieces. If not set, the default is 1048576 KB (1024 MB). | `1048576` | `1048576` |
| `AP_SANDBOX_PROPAGATED_ENV_VARS` | Environment variables that are propagated to the sandboxed code. If you are using this for pieces, we strongly suggest keeping everything in the authentication object to make sure it works across Activepieces instances. | None | |
| `AP_TELEMETRY_ENABLED` | Collect telemetry information. | `true` | |
| `AP_TEMPLATES_SOURCE_URL` | The endpoint queried for templates. If you remove it, templates are removed from the UI. | `https://cloud.activepieces.com/api/v1/templates` | |
| `AP_WEBHOOK_TIMEOUT_SECONDS` | The default timeout for webhooks. The maximum allowed is 15 minutes. Note that Cloudflare limits it to 30 seconds. If you are using a reverse proxy for SSL, make sure it is configured correctly. | `30` | |
| `AP_TRIGGER_FAILURE_THRESHOLD` | The maximum number of consecutive trigger failures. The default of 576 corresponds to approximately 2 days at the default 5-minute polling interval. | `576` | |
| `AP_PROJECT_RATE_LIMITER_ENABLED` | Enforce rate limits and prevent excessive usage by a single project. | `true` | |
| `AP_MAX_CONCURRENT_JOBS_PER_PROJECT` | The maximum number of active runs a project can have. This is used to enforce rate limits and prevent excessive usage by a single project. | `100` | |
| `AP_S3_ACCESS_KEY_ID` | The access key ID for your S3-compatible storage service. Not required if `AP_S3_USE_IRSA` is `true`. | None | |
| `AP_S3_SECRET_ACCESS_KEY` | The secret access key for your S3-compatible storage service. Not required if `AP_S3_USE_IRSA` is `true`. | None | |
| `AP_S3_BUCKET` | The name of the S3 bucket to use for file storage. | None | |
| `AP_S3_ENDPOINT` | The endpoint URL for your S3-compatible storage service. Not required if `AWS_ENDPOINT_URL` is set. | None | `https://s3.amazonaws.com` |
| `AP_S3_REGION` | The region where your S3 bucket is located. Not required if `AWS_REGION` is set. | None | `us-east-1` |
| `AP_S3_USE_SIGNED_URLS` | Routes file traffic to S3 directly. It should be enabled if the S3 bucket is public. | None | |
| `AP_S3_USE_IRSA` | Use IAM Role for Service Accounts (IRSA) to connect to S3. When `true`, `AP_S3_ACCESS_KEY_ID` and `AP_S3_SECRET_ACCESS_KEY` are not required. | None | `true` |
| `AP_SMTP_HOST` | The host name of the SMTP server that Activepieces uses to send emails | None | `mail.example.com` |
| `AP_SMTP_PORT` | The port number of the SMTP server that Activepieces uses to send emails | None | `587` |
| `AP_SMTP_USERNAME` | The user name for the SMTP server that Activepieces uses to send emails | None | `test@mail.example.com` |
| `AP_SMTP_PASSWORD` | The password for the SMTP server that Activepieces uses to send emails | None | `secret1234` |
| `AP_SMTP_SENDER_EMAIL` | The email address from which Activepieces sends emails. | None | `test@mail.example.com` |

|

||||

| `AP_SMTP_SENDER_NAME` | The sender name activepieces uses to send emails.

|

||||

| `AP_MAX_FILE_SIZE_MB` | The maximum allowed file size in megabytes for uploads including logs of flow runs. If logs exceed this size, they will be truncated which may cause flow execution issues. | `10` | `10` |

|

||||

| `AP_FILE_STORAGE_LOCATION` | The location to store files. Possible values are `DB` for storing files in the database or `S3` for storing files in an S3-compatible storage service. | `DB` | |

|

||||

| `AP_PAUSED_FLOW_TIMEOUT_DAYS` | The maximum allowed pause duration in days for a paused flow, please note it can not exceed `AP_EXECUTION_DATA_RETENTION_DAYS` | `30` |

|

||||

| `AP_MAX_RECORDS_PER_TABLE` | The maximum allowed number of records per table | `1500` | `1500`

|

||||

| `AP_MAX_FIELDS_PER_TABLE` | The maximum allowed number of fields per table | `15` | `15`

|

||||

| `AP_MAX_TABLES_PER_PROJECT` | The maximum allowed number of tables per project | `20` | `20`

|

||||

| `AP_MAX_MCPS_PER_PROJECT` | The maximum allowed number of mcp per project | `20` | `20`

|

||||

| `AP_ENABLE_FLOW_ON_PUBLISH` | Whether publishing a new flow version should automatically enable the flow | `true` | `false`

|

||||

| `AP_ISSUE_ARCHIVE_DAYS` | Controls the automatic archival of issues in the system. Issues that have not been updated for this many days will be automatically moved to an archived state.| `14` | `1`

|

||||

| `AP_APP_TITLE` | Initial title shown in the browser tab while loading the app | `Activepieces` | `Activepieces`

|

||||

| `AP_FAVICON_URL` | Initial favicon shown in the browser tab while loading the app | `https://cdn.activepieces.com/brand/favicon.ico` | `https://cdn.activepieces.com/brand/favicon.ico`

<Warning>
The frontend URL is essential for webhooks and app triggers to work. It must be accessible to third parties so they can send data.
</Warning>

### Setting Webhook (Frontend URL)

By default, the URL is set to the machine's IP address. For webhooks to work, make sure this address is accessible to third-party services, or set the `AP_FRONTEND_URL` environment variable.

One option is to use a service like ngrok ([https://ngrok.com/](https://ngrok.com/)) to expose the frontend port (4200) to the internet.
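
Once the tunnel is running, its public address can serve as the frontend URL. The snippet below is a minimal sketch that assumes ngrok is already installed and authenticated; the forwarding address is a placeholder for the one ngrok prints in your terminal.

```bash
# In a separate terminal, expose the frontend port with ngrok:
#   ngrok http 4200
# ngrok prints a public "Forwarding" HTTPS address. The value below is a
# placeholder; replace it with your own tunnel address.
AP_FRONTEND_URL=https://your-subdomain.ngrok-free.app
export AP_FRONTEND_URL

echo "Frontend URL set to: $AP_FRONTEND_URL"
```

Keep the tunnel running for as long as third-party services need to reach the instance.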

### Redis Configuration

Set the `AP_REDIS_URL` environment variable to the connection URL of your Redis server.

If a Redis connection URL is specified, all other **Redis properties** are ignored.

<Info>
If you don't have a Redis URL, you can use the following variables instead:

- `REDIS_USER`: The username to use when connecting to Redis.
- `REDIS_PASSWORD`: The password to use when connecting to Redis.
- `REDIS_HOST`: The hostname or IP address of the Redis server.
- `REDIS_PORT`: The port number of the Redis server.
- `REDIS_DB`: The Redis database index to use.
- `REDIS_USE_SSL`: Connect to Redis with SSL.
</Info>
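
If you would rather provide a single `AP_REDIS_URL`, the same values can be combined into one connection URL. The following is a minimal sketch, assuming the standard `redis://` scheme (`rediss://` for TLS) and the variable names listed above. `build_redis_url` is a hypothetical helper, not part of Activepieces, and the fallback values are illustrative.

```bash
# Hypothetical helper (not part of Activepieces): build a Redis connection URL.
# Arguments: user, password, host, port, database index, use_ssl ("true"/"false").
# Uses the standard redis:// scheme, or rediss:// when use_ssl is "true".
build_redis_url() {
  _scheme="redis"
  if [ "$6" = "true" ]; then
    _scheme="rediss"
  fi
  # Only include the credentials section when a password is given.
  _auth=""
  if [ -n "$2" ]; then
    _auth="$1:$2@"
  fi
  printf '%s://%s%s:%s/%s\n' "$_scheme" "$_auth" "$3" "$4" "$5"
}

# Fallbacks (localhost, 6379, database 0, no TLS) are illustrative defaults.
AP_REDIS_URL="$(build_redis_url "${REDIS_USER:-}" "${REDIS_PASSWORD:-}" "${REDIS_HOST:-localhost}" "${REDIS_PORT:-6379}" "${REDIS_DB:-0}" "${REDIS_USE_SSL:-false}")"
export AP_REDIS_URL
echo "$AP_REDIS_URL"
```

Passwords containing characters such as `@`, `:` or `/` need to be percent-encoded before they are placed in the URL.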

<Info>
If you are using **Redis Sentinel**, you can set the following environment variables:

- `AP_REDIS_TYPE`: Set this to `SENTINEL`.
- `AP_REDIS_SENTINEL_HOSTS`: A comma-separated list of `host:port` pairs for Redis Sentinels. When set, all other Redis properties will be ignored.
- `AP_REDIS_SENTINEL_NAME`: The name of the master node monitored by the sentinels.
- `AP_REDIS_SENTINEL_ROLE`: The role to connect to, either `master` or `slave`.
- `AP_REDIS_PASSWORD`: The password to use when connecting to Redis.
- `AP_REDIS_USE_SSL`: Connect to Redis with SSL.
- `AP_REDIS_SSL_CA_FILE`: The path to the CA file for the Redis server.
</Info>
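
A malformed Sentinel host list is an easy mistake to make. The sketch below checks that a value has the documented comma-separated `host:port` form before you export it. `validate_sentinel_hosts` is a hypothetical helper written for POSIX `sh`; it only checks the format and does not contact the Sentinels.

```bash
# Hypothetical helper (POSIX sh): check that a value is a comma-separated list of
# host:port pairs with a non-empty host and a numeric port. It does not contact
# the Sentinels. POSIX sh has no `local`, so helper variables use a _ prefix.
validate_sentinel_hosts() {
  _rest="$1"
  if [ -z "$_rest" ]; then
    echo "empty Sentinel host list" >&2
    return 1
  fi
  while [ -n "$_rest" ]; do
    _entry="${_rest%%,*}"          # first entry up to the next comma
    if [ "$_entry" = "$_rest" ]; then
      _rest=""                     # that was the last entry
    else
      _rest="${_rest#*,}"          # drop the first entry and its comma
    fi
    _host="${_entry%:*}"           # everything before the last colon
    _port="${_entry##*:}"          # everything after the last colon
    case "$_port" in
      ''|*[!0-9]*)
        echo "invalid port in entry '$_entry'" >&2
        return 1
        ;;
    esac
    if [ -z "$_host" ] || [ "$_host" = "$_entry" ]; then
      echo "invalid host in entry '$_entry'" >&2
      return 1
    fi
  done
  return 0
}

# Example value from the environment variable table.
AP_REDIS_SENTINEL_HOSTS=sentinel-host-1:26379,sentinel-host-2:26379,sentinel-host-3:26379
export AP_REDIS_SENTINEL_HOSTS
validate_sentinel_hosts "$AP_REDIS_SENTINEL_HOSTS" && echo "Sentinel host list looks valid"
```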

### SMTP Configuration

SMTP can be configured both from the platform admin screen and through environment variables. The environment variables are only used if no email configuration has been entered in the platform admin screen.

Activepieces will only use the configuration from the environment variables if `AP_SMTP_HOST`, `AP_SMTP_PORT`, `AP_SMTP_USERNAME`, and `AP_SMTP_PASSWORD` all have a value set. TLS is supported.
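
The rule above can be mirrored in a quick pre-flight check. This is an illustrative sketch, not Activepieces code: it only tests that the four required variables are non-empty, and it cannot tell whether an email configuration already exists in the platform admin screen (which would take precedence). The values are the placeholders from the table.

```bash
# Placeholder values from the environment variable table.
export AP_SMTP_HOST=mail.example.com
export AP_SMTP_PORT=587
export AP_SMTP_USERNAME=test@mail.example.com
export AP_SMTP_PASSWORD=secret1234
export AP_SMTP_SENDER_EMAIL=test@mail.example.com

# Hypothetical check: succeeds only when all four required variables are non-empty.
smtp_env_complete() {
  [ -n "${AP_SMTP_HOST:-}" ] &&
    [ -n "${AP_SMTP_PORT:-}" ] &&
    [ -n "${AP_SMTP_USERNAME:-}" ] &&
    [ -n "${AP_SMTP_PASSWORD:-}" ]
}

if smtp_env_complete; then
  echo "All required SMTP variables are set"
else
  echo "SMTP environment variables are incomplete and will not be used" >&2
fi
```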


52

activepieces-fork/docs/install/configuration/hardware.mdx

Normal file


@@ -0,0 +1,52 @@

---
title: "Hardware Requirements"
icon: "server"
description: "Specifications for hosting Activepieces"
---

For more information about the architecture, please visit our [architecture](../architecture/overview) page.

### Technical Specifications

Activepieces is designed to be memory-intensive rather than CPU-intensive. A modest instance will suffice for most scenarios, but requirements can vary based on specific use cases.

| Component | Memory (RAM) | CPU Cores | Disk Space | Notes |
| ------------- | ------------ | --------- | ---------- | ----- |
| PostgreSQL | 1 GB | 1 | - | |
| Redis | 1 GB | 1 | - | |
| Activepieces | 4 GB | 1 | 30 GB | |

<Tip>
The above recommendations are designed to meet the needs of the majority of use cases.
</Tip>

## Scaling Factors

### Redis

Redis requires minimal scaling, as it primarily stores jobs during processing. Activepieces uses BullMQ, which can handle a substantial number of jobs per second.

### PostgreSQL

<Tip>
**Scaling Tip:** Since files are stored in the database by default, you can reduce the load by configuring S3 storage for file management.
</Tip>

PostgreSQL is typically not the system's bottleneck.

### Activepieces Container

<Tip>
**Scaling Tip:** The Activepieces container is stateless, allowing for seamless horizontal scaling.
</Tip>

- `FLOW_WORKER_CONCURRENCY` and `SCHEDULED_WORKER_CONCURRENCY` control the number of concurrent jobs processed for flows and scheduled flows, respectively. By default, they are set to 20 and 10.

## Expected Performance

Activepieces ensures no request is lost: all requests are queued. During a spike, requests are processed later, which is acceptable because most flows are asynchronous; synchronous flows are prioritized.

Exact performance is hard to predict because flows vary widely. However, running a flow does not add significant overhead, as it runs as fast as regular JavaScript.
(Note: This applies to the `SANDBOX_CODE_ONLY` and `UNSANDBOXED` execution modes, which are recommended and used in self-hosted setups.)

You can expect to handle over **20 million executions** per month with this setup.
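
To put the monthly figure in perspective, it can be converted into an average rate. This back-of-the-envelope sketch assumes a 30-day month and evenly spread traffic; real workloads are burstier, which is where the queueing described above helps.

```bash
# Back-of-the-envelope conversion from executions per month to an average rate.
# Assumes a 30-day month (30 * 24 * 60 * 60 seconds) and evenly distributed traffic.
avg_executions_per_second() {
  awk -v monthly="$1" 'BEGIN { printf "%.1f\n", monthly / (30 * 24 * 60 * 60) }'
}

avg_executions_per_second 20000000
```

For 20 million executions it prints `7.7`, roughly 8 executions per second on average.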


65

activepieces-fork/docs/install/configuration/overview.mdx

Normal file


@@ -0,0 +1,65 @@

---
title: "Deployment Checklist"
description: "Checklist to follow after deploying Activepieces"
icon: "list"
---

<Info>
This tutorial assumes you have already followed the quick start guide using one of the installation methods listed in [Install Overview](../overview).
</Info>

In this section, we go through a checklist to follow after using one of the installation methods, to make sure your deployment is production-ready.

<AccordionGroup>
<Accordion title="Decide on Sandboxing" icon="code">

Decide on the sandboxing mode for your deployment based on your use case and whether it is multi-tenant. Here is a simplified way to decide:

<Tip>
**Friendly Tip #1**: For multi-tenant setups, use V8/Code Sandboxing.

It is secure and does not require privileged Docker access in Kubernetes.
Privileged Docker is usually not allowed, to prevent root escalation threats.
</Tip>

<Tip>
**Friendly Tip #2**: For single-tenant setups, use No Sandboxing. It is faster and does not require privileged Docker access.
</Tip>

<Snippet file="execution-mode.mdx" />

More information at [Sandboxing & Workers](../architecture/workers#sandboxing).
</Accordion>

<Accordion title="Enterprise Edition (Optional)" icon="building">
<Tip>
For licensing inquiries regarding the self-hosted enterprise edition, please reach out to `sales@activepieces.com`, as the code and Docker image are not covered by the MIT license.
</Tip>

<Note>You can request a trial key from within the app or in the cloud by filling out the form. Alternatively, you can contact sales at [https://www.activepieces.com/sales](https://www.activepieces.com/sales).<br></br>Please note that when your trial runs out, all enterprise [features](https://www.activepieces.com/pricing) will be shut down. This means any user other than the platform admin will be deactivated, and your private pieces will be deleted, which could cause flows that use them to fail.</Note>

<Warning>
Before version 0.73.0, you cannot switch from CE to EE directly. We suggest upgrading to 0.73.0 with the same edition first, then switching `AP_EDITION`.
</Warning>

<Warning>
Enterprise edition must use `PostgreSQL` as the database backend and `Redis` as the queue system.
</Warning>

## Installation

1. Set the `AP_EDITION` environment variable to `ee`.
2. Set `AP_EXECUTION_MODE` to anything other than `UNSANDBOXED` (see the sandboxing section above).
3. Once your instance is up, activate the license key by going to **Platform Admin -> Setup -> License Keys**.
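
Steps 1 and 2 can be checked before starting the instance. This is a minimal sketch: `check_ee_env` is a hypothetical helper, and `SANDBOX_CODE_ONLY` is used only as an example of a mode other than `UNSANDBOXED`; choose the mode that matches your sandboxing decision.

```bash
# Hypothetical pre-flight check for installation steps 1 and 2 (not Activepieces code).
# It is deliberately strict and also fails when AP_EXECUTION_MODE is unset.
check_ee_env() {
  if [ "${AP_EDITION:-}" != "ee" ]; then
    echo "AP_EDITION must be set to ee" >&2
    return 1
  fi
  if [ -z "${AP_EXECUTION_MODE:-}" ] || [ "$AP_EXECUTION_MODE" = "UNSANDBOXED" ]; then
    echo "AP_EXECUTION_MODE must be set to a mode other than UNSANDBOXED" >&2
    return 1
  fi
  return 0
}

# Example values; SANDBOX_CODE_ONLY only illustrates a non-UNSANDBOXED mode.
export AP_EDITION=ee
export AP_EXECUTION_MODE=SANDBOX_CODE_ONLY

check_ee_env && echo "Environment matches installation steps 1 and 2"
```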

</Accordion>
<Accordion title="Setup HTTPS" icon="lock">
Setting up HTTPS is highly recommended because many services require webhook URLs to be secure (HTTPS). This helps prevent potential errors.

To set up SSL, you can use any reverse proxy. For a step-by-step guide, check out our example using [Nginx](../guides/setup-ssl).
</Accordion>
<Accordion title="Troubleshooting (Optional)" icon="wrench">
If you encounter any issues, check out our [Troubleshooting](../troubleshooting/websocket-issues) guide.
</Accordion>
</AccordionGroup>


25

activepieces-fork/docs/install/configuration/telemetry.mdx

Normal file


@@ -0,0 +1,25 @@

---
title: "Telemetry"
description: ""
icon: 'calculator'
---

# Why Does Activepieces Need Data?

As a self-hosted product, gathering usage metrics and insights can be difficult for us. However, these analytics are essential in helping us understand key behaviors and deliver a higher-quality experience that meets your needs.

To ensure we can continue to improve our product, we have decided to track certain basic behaviors and metrics that are vital for understanding the usage of Activepieces.

We have implemented a minimal tracking plan and provide a detailed list of the metrics collected in a separate section.

# What Does Activepieces Collect?

We value transparency in data collection and assure you that we do not collect any personal information. The following events are currently being collected:

[Exact Code](https://github.com/activepieces/activepieces/blob/main/packages/shared/src/lib/common/telemetry.ts)

# Opting Out

To opt out, set the environment variable `AP_TELEMETRY_ENABLED=false`.


48

activepieces-fork/docs/install/guides/separate-workers.mdx

Normal file


@@ -0,0 +1,48 @@

---
title: 'How to Separate Workers'
description: ''
icon: 'robot'
---

Benefits of separating workers from the main application (APP):

- **Availability**: The application remains lightweight, allowing workers to be scaled independently.
- **Security**: Workers lack direct access to Redis and the database, minimizing the impact of a security breach.

<Steps>
<Step title="Create Worker Token">
To create a worker token, use the local CLI command to generate the JWT and sign it with the same `AP_JWT_SECRET` used by the app server. Follow these steps:

1. Open your terminal and navigate to the root of the repository.
2. Run the command: `npm run workers token`.
3. When prompted, enter the JWT secret (this should be the same as the `AP_JWT_SECRET` used by the app server).
4. The generated token is displayed in your terminal. Copy it for use in the next step.

</Step>
<Step title="Configure Environment Variables">
Define the following environment variables in the `.env` file on the worker machine:

- Set `AP_CONTAINER_TYPE` to `WORKER`
- Specify `AP_FRONTEND_URL`
- Provide `AP_WORKER_TOKEN`
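
Put together, the worker's `.env` file might look like the following. All values are placeholders; unquoted `KEY=value` lines are used so the same file can be read by Docker or sourced by a POSIX shell.

```bash
# Placeholder values for a worker machine's .env file.
AP_CONTAINER_TYPE=WORKER
# Placeholder: the frontend URL of your Activepieces deployment.
AP_FRONTEND_URL=https://activepieces.example.com
# Placeholder: paste the token printed by `npm run workers token`.
AP_WORKER_TOKEN=paste-generated-token-here
```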

</Step>
<Step title="Configure Persistent Volume">
Configure a persistent volume for the worker to cache flows and pieces. This is important because the first uncached execution of pieces and flows is very slow; a persistent volume significantly improves execution speed.

Add the following volume mapping to your Docker configuration:

```yaml
volumes:
  - <your path>:/usr/src/app/cache
```

Note: Attach one volume per worker. A cache volume cannot be shared across multiple workers.
</Step>
<Step title="Launch Worker Machine">
Launch the worker machine and supply it with the generated token.
</Step>
<Step title="Verify Worker Operation">
Verify that the workers are visible in the Platform Admin Console under Infra -> Workers.

</Step>
<Step title="Configure App Container Type">
On the APP machine, set `AP_CONTAINER_TYPE` to `APP`.
</Step>
</Steps>


33

activepieces-fork/docs/install/guides/setup-app-webhooks.mdx

Normal file


@@ -0,0 +1,33 @@

---
title: "How to Setup App Webhooks"
description: ""
icon: 'webhook'
---

Certain apps, like Slack and Square, only support one webhook per OAuth2 app. This means the webhook must be configured manually in their developer portal; it cannot be automated.

## Slack

**Configure Webhook Secret**

1. Visit the "Basic Information" section of your Slack OAuth settings.
2. Copy the "Signing Secret" and save it.
3. Set the following environment variable in your Activepieces environment:
```
AP_APP_WEBHOOK_SECRETS={"@activepieces/piece-slack": {"webhookSecret": "SIGNING_SECRET"}}
```
4. Restart your application instance.
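
When setting this variable from a shell, the JSON value has to be quoted so its inner double quotes survive. A minimal sketch, where `SIGNING_SECRET` stays a placeholder for the value copied from Slack:

```bash
# Single quotes keep the inner double quotes of the JSON intact.
# SIGNING_SECRET is a placeholder for the Signing Secret copied from Slack.
AP_APP_WEBHOOK_SECRETS='{"@activepieces/piece-slack": {"webhookSecret": "SIGNING_SECRET"}}'
export AP_APP_WEBHOOK_SECRETS

# Print the value exactly as the process will receive it.
printf '%s\n' "$AP_APP_WEBHOOK_SECRETS"
```

Quoting rules differ for Docker Compose `.env` files and Kubernetes manifests, so check how your deployment tool handles quotes before copying this line.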

**Configure Webhook URL**

1. Go to the "Event Subscription" settings in the Slack OAuth2 developer platform.
2. Set the request URL using the format `https://YOUR_AP_INSTANCE/api/v1/app-events/slack`.
3. When connecting to Slack, use your OAuth2 credentials or update the OAuth2 app details from the admin console (in platform plans).
4. Add the following events to the app:
   - `message.channels`
   - `reaction_added`
   - `message.im`
   - `message.groups`
   - `message.mpim`
   - `app_mention`
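
The request URL from step 2 is your instance's base URL followed by a fixed path. The sketch below builds it, assuming `YOUR_AP_INSTANCE` corresponds to the base of your frontend URL; `slack_events_url` is a hypothetical helper that also strips a trailing slash to avoid a double `//`.

```bash
# Hypothetical helper: append the Slack app-events path to an instance base URL,
# removing one trailing slash from the base first.
slack_events_url() {
  printf '%s/api/v1/app-events/slack\n' "${1%/}"
}

# Placeholder base URL; use the public URL of your own instance.
slack_events_url "https://activepieces.example.com/"
```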


@@ -0,0 +1,19 @@

---
title: "How to Setup OpenTelemetry"
description: "Configure OpenTelemetry for observability and tracing"
icon: "chart-line"
---

Activepieces supports both standard OpenTelemetry environment variables and vendor-specific configuration for observability and tracing.

## Environment Variables

| Variable | Description | Default Value | Example |
|----------|-------------|---------------|---------|
| `AP_OTEL_ENABLED` | Enable OpenTelemetry tracing | `false` | `true` |
| `OTEL_EXPORTER_OTLP_ENDPOINT` | OTLP exporter endpoint URL | None | `https://your-collector:4317/v1/traces` |
| `OTEL_EXPORTER_OTLP_HEADERS` | Headers for OTLP exporter (comma-separated key=value pairs) | None | `Authorization=Bearer token` |

<Note>
Both `AP_OTEL_ENABLED` and `OTEL_EXPORTER_OTLP_ENDPOINT` must be set for OpenTelemetry to be enabled.
</Note>
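
A minimal configuration sketch using the placeholder values from the table. The `otel_will_be_enabled` helper is hypothetical; it mirrors the note above (tracing only turns on when both variables are set) and assumes `AP_OTEL_ENABLED` is compared against the literal string `true`.

```bash
# Placeholder values taken from the table; point the endpoint at your collector.
export AP_OTEL_ENABLED=true
export OTEL_EXPORTER_OTLP_ENDPOINT=https://your-collector:4317/v1/traces
export OTEL_EXPORTER_OTLP_HEADERS="Authorization=Bearer token"

# Hypothetical check mirroring the note: both variables must be set.
otel_will_be_enabled() {
  [ "${AP_OTEL_ENABLED:-false}" = "true" ] && [ -n "${OTEL_EXPORTER_OTLP_ENDPOINT:-}" ]
}

if otel_will_be_enabled; then
  echo "OpenTelemetry settings are complete"
else
  echo "OpenTelemetry will stay disabled" >&2
fi
```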


27

activepieces-fork/docs/install/guides/setup-s3.mdx

Normal file


@@ -0,0 +1,27 @@

---
title: "How to Setup S3"
description: "Configure S3-compatible storage for files and run logs"
icon: "cloud"
---

Run logs and files are stored in the database by default. For most cases the database is enough, and you can switch to S3 later without any migration.

We recommend starting with the database and switching to S3 if needed. After switching, expired files in the database will be deleted and everything will be stored in S3. No manual migration is needed.

## Environment Variables

| Variable | Description | Default Value | Example |
|----------|-------------|---------------|---------|
| `AP_FILE_STORAGE_LOCATION` | The location to store files. Set to `S3` for S3 storage. | `DB` | `S3` |
| `AP_S3_ACCESS_KEY_ID` | The access key ID for your S3-compatible storage service. Not required if `AP_S3_USE_IRSA` is `true`. | None | |
| `AP_S3_SECRET_ACCESS_KEY` | The secret access key for your S3-compatible storage service. Not required if `AP_S3_USE_IRSA` is `true`. | None | |
| `AP_S3_BUCKET` | The name of the S3 bucket to use for file storage. | None | |
| `AP_S3_ENDPOINT` | The endpoint URL for your S3-compatible storage service. Not required if `AWS_ENDPOINT_URL` is set. | None | `https://s3.amazonaws.com` |
| `AP_S3_REGION` | The region where your S3 bucket is located. Not required if `AWS_REGION` is set. | None | `us-east-1` |
| `AP_S3_USE_SIGNED_URLS` | Routes traffic to S3 directly. It should be enabled if the S3 bucket is public. | None | `true` |
| `AP_S3_USE_IRSA` | Use IAM Role for Service Accounts (IRSA) to connect to S3. When `true`, `AP_S3_ACCESS_KEY_ID` and `AP_S3_SECRET_ACCESS_KEY` are not required. | None | `true` |
| `AP_MAX_FILE_SIZE_MB` | The maximum allowed file size in megabytes for uploads, including logs of flow runs. | `10` | `10` |

<Tip>
**Friendly Tip #1**: If the S3 bucket supports signed URLs but needs to be accessible over a public network, you can set `AP_S3_USE_SIGNED_URLS` to `true` to route traffic directly to S3 and reduce heavy traffic on your API server.
</Tip>
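
A sketch of an S3 configuration with placeholder values, plus a hypothetical `s3_env_complete` check that mirrors the table: static credentials are only required when `AP_S3_USE_IRSA` is not `true`. The bucket name, region, and keys are placeholders, not real values.

```bash
# Placeholder values for S3-compatible storage; replace them with your own.
export AP_FILE_STORAGE_LOCATION=S3
export AP_S3_BUCKET=my-activepieces-files
export AP_S3_REGION=us-east-1
export AP_S3_ACCESS_KEY_ID=example-access-key-id
export AP_S3_SECRET_ACCESS_KEY=example-secret-access-key

# Hypothetical pre-flight check (not Activepieces code).
s3_env_complete() {
  if [ "${AP_FILE_STORAGE_LOCATION:-DB}" != "S3" ]; then
    echo "AP_FILE_STORAGE_LOCATION is not S3" >&2
    return 1
  fi
  if [ -z "${AP_S3_BUCKET:-}" ]; then
    echo "AP_S3_BUCKET is missing" >&2
    return 1
  fi
  # Static credentials are only required when IRSA is not used.
  if [ "${AP_S3_USE_IRSA:-}" != "true" ]; then
    if [ -z "${AP_S3_ACCESS_KEY_ID:-}" ] || [ -z "${AP_S3_SECRET_ACCESS_KEY:-}" ]; then
      echo "AP_S3_ACCESS_KEY_ID and AP_S3_SECRET_ACCESS_KEY are required without IRSA" >&2
      return 1
    fi
  fi
  return 0
}

s3_env_complete && echo "S3 settings look complete"
```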


73

activepieces-fork/docs/install/guides/setup-ssl.mdx

Normal file


@@ -0,0 +1,73 @@

---
title: "Setup HTTPS"
description: ""
icon: "shield"
---

To enable SSL, you can use a reverse proxy. In this guide, we use Nginx as the reverse proxy.

## Install Nginx

```bash
sudo apt-get install nginx
```

## Create Certificate

This guide assumes that you already have a certificate for your domain.

<Tip>
You can use Cloudflare, or generate a certificate with Let's Encrypt (for example, using Certbot).
</Tip>

Place the certificate files at the following paths: `/etc/key.pem` (private key) and `/etc/cert.pem` (certificate).

## Setup Nginx

```bash
sudo nano /etc/nginx/sites-available/default
```

```nginx
server {
    listen 80;
    listen [::]:80;

    server_name example.com www.example.com;

    return 301 https://$server_name$request_uri;
}

server {
    listen 443 ssl http2;
    listen [::]:443 ssl http2;

    server_name example.com www.example.com;

    ssl_certificate /etc/cert.pem;
    ssl_certificate_key /etc/key.pem;

    location / {
        proxy_pass http://localhost:8080;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }
}
```

## Restart Nginx

```bash
sudo systemctl restart nginx
```

## Test

Visit your domain; you should see your application running over HTTPS.


139

activepieces-fork/docs/install/options/aws.mdx

Normal file


@@ -0,0 +1,139 @@

---
title: "AWS (Pulumi)"
description: "Get Activepieces up & running on AWS with Pulumi for IaC"
---

# Infrastructure-as-Code (IaC) with Pulumi

Pulumi is an IaC solution, similar to Terraform or CloudFormation, that lets you deploy and manage your infrastructure using popular programming languages such as TypeScript (which we'll use), C#, and Go.

## Deploy from Pulumi Cloud

If you're already familiar with Pulumi Cloud and have [integrated their services with your AWS account](https://www.pulumi.com/docs/pulumi-cloud/deployments/oidc/aws/#configuring-openid-connect-for-aws), you can use the button below to deploy Activepieces in a few clicks.
The template will deploy the latest Activepieces image available on [Docker Hub](https://hub.docker.com/r/activepieces/activepieces).

[Deploy with Pulumi](https://app.pulumi.com/new?template=https://github.com/activepieces/activepieces/tree/main/deploy/pulumi)

## Deploy from a local environment

Alternatively, if you're already using an S3 bucket to maintain your Pulumi state, you can scaffold and deploy Activepieces directly from Docker Hub using the template below in just a few commands:

```bash
$ mkdir deploy-activepieces && cd deploy-activepieces
$ pulumi new https://github.com/activepieces/activepieces/tree/main/deploy/pulumi
$ pulumi up
```

## What's Deployed?

The template is set up to be somewhat flexible, supporting either a development or a more production-ready configuration.
The configuration options presented during stack configuration allow you to optionally add any or all of:

* PostgreSQL RDS instance. Opting out of this will use a local SQLite3 database.
* Single-node Redis 7 cluster. Opting out of this will mean using an in-memory cache.
* Fully qualified domain name with SSL. Note that the hosted zone must already be configured in Route 53.
  Opting out of this means relying on the application load balancer's URL over standard HTTP to access your Activepieces deployment.

For a full list of all the currently available configuration options, take a look at the [Activepieces Pulumi template file on GitHub](https://github.com/activepieces/activepieces/tree/main/deploy/pulumi/Pulumi.yaml).

## Setting up Pulumi for the first time

If you're new to Pulumi, read on to set up your local dev environment so you can deploy Activepieces.

### Prerequisites

1. Make sure you have [Node](https://nodejs.org/en/download) and [Pulumi](https://www.pulumi.com/docs/install/) installed.
2. [Install and configure the AWS CLI](https://docs.aws.amazon.com/cli/latest/userguide/getting-started-install.html).
3. [Install and configure Pulumi](https://www.pulumi.com/docs/clouds/aws/get-started/begin/).
4. Create an S3 bucket, which we'll use to maintain the state of all the services we'll provision for our Activepieces deployment. S3 bucket names are globally unique, so you may need to choose a name other than `pulumi-state` and use it in the following commands:

```bash
aws s3api create-bucket --bucket pulumi-state --region us-east-1
```

<Tip>
Note: [Pulumi supports two different state management approaches](https://www.pulumi.com/docs/concepts/state/#deciding-on-a-state-backend).
If you'd rather use Pulumi Cloud instead of S3, feel free to skip this step and set up an account with Pulumi.
</Tip>

5. Log in to the Pulumi backend:

```bash
pulumi login 's3://pulumi-state?region=us-east-1'
```

6. Next, use the Activepieces Pulumi deploy template to create a new project and a stack in that project:

```bash
$ mkdir deploy-activepieces && cd deploy-activepieces
$ pulumi new https://github.com/activepieces/activepieces/tree/main/deploy/pulumi
```

This step will prompt you to create your stack and to populate a series of config options, such as whether or not to provision a PostgreSQL RDS instance or use SQLite3.

<Tip>
Note: When choosing a stack name, use something descriptive like `activepieces-dev`, `ap-prod`, etc.
This solution uses the stack name as a prefix for every AWS service created,
e.g. your VPC will be named `<stack name>-vpc`.
</Tip>

7. Nothing left to do now but kick off the deploy:

```bash
pulumi up
```

8. Choose `yes` when prompted. Once the deployment has finished, you should see a number of Pulumi output variables that look like the following:
```json
_: {
    activePiecesUrl: "http://<alb name & id>.us-east-1.elb.amazonaws.com"
    activepiecesEnv: [
        . . . .
    ]
}
```

The output value of interest here is `activePiecesUrl`, as that is the URL for your Activepieces deployment.
If you chose to add a fully qualified domain during your stack configuration, it will be displayed here.
Otherwise, you'll see the URL of the application load balancer. And that's it.

Congratulations! You have successfully deployed Activepieces to AWS.

## Deploy a locally built Activepieces Docker image

To deploy a locally built image instead of using the official Docker Hub image, read on.

1. Clone the Activepieces repo locally:

```bash
git clone https://github.com/activepieces/activepieces
```

2. Move into the `deploy/pulumi` folder of the cloned repository and install the necessary npm packages:

```bash
cd activepieces/deploy/pulumi && npm i
```

3. This folder already has two Pulumi stack configuration files ready to go: `Pulumi.activepieces-dev.yaml` and `Pulumi.activepieces-prod.yaml`.
These files already contain all the configuration we need to create our environments. Feel free to have a look and edit the values as you see fit.